Waterloo Fractal Coding and Analysis Group

(formerly known as the "Waterloo Fractal Compression Project")

The Waterloo Fractal Coding and Analysis Group (which originated from the "Waterloo Fractal Compression Project" that used to be located at this website) is comprised of five researchers who have been interested in various aspects of fractal analysis including: iterated function systems, fractal image coding, generalized fractal transforms and the inverse problem of approximation using fixed points of contraction mappings. The "fractalators" of this group are

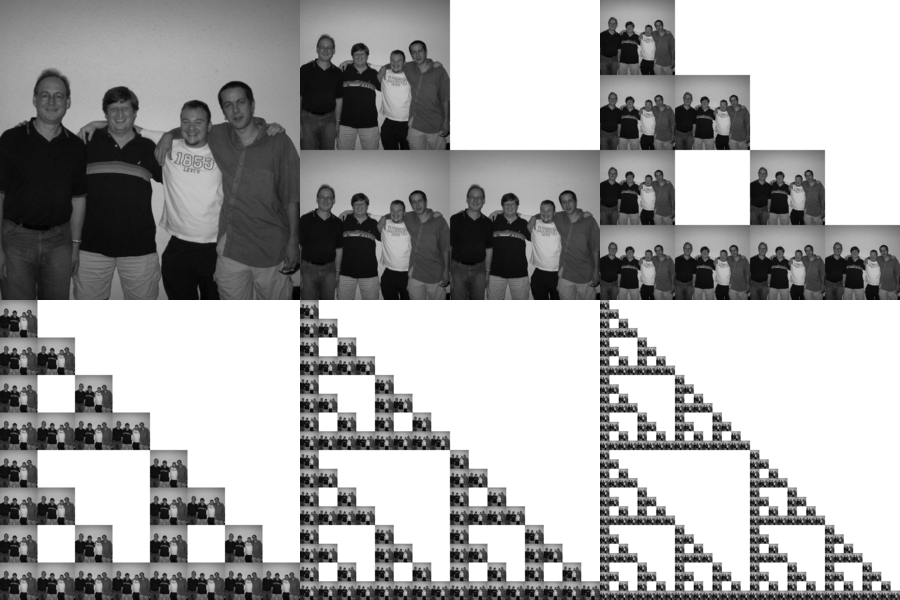

The first four "fractalators" listed above are pictured below. This photo was taken during an

"IFS brainstorming session" at Waterloo in 2006:

Ed Vrscay, Herb Kunze, Davide La Torre, Franklin Mendivil, Ed Vrscay, Herb Kunze .... (Photo and graphical effects courtesy of Franklin Mendivil)

For a brief history of the Waterloo Fractal Compression Project and its evolution to our present group, please click here

Iterated Function Systems (IFS)

IFS is the term originally devised by Michael Barnsley and Steven Demko [1] for a collection of contraction mappings over a complete metric space, typically compact subsets of R^n. Systems of contraction mappings had been considered previously by a number authors for various purposes. But the landmark papers of John Hutchinson [2] and, independently, Barnsley and Demko [1] showed how such systems of mappings with associated probabilities could be used to construct fractal sets and measures: the former from a geometric measure theory setting and the latter from a probabilistic setting. For those who are not familiar with IFS, we'll provide a simple discussion and example a little later in this section.

Just as important, however, was the fact that the Barnsley/Demko paper was the first to suggest that IFS could be used to approximate natural objects. This was the seed of the inverse problem of fractal approximation: Given a "target" set, S, for example, a leaf, can we find an IFS with attractor A (see below) that approximates S to a reasonable degree.

In a subsequent paper, Barnsley and students [3] showed how the inverse problem of fractal approximation was could be reformulated by means of the infamous "Collage Theorem": instead of trying to find an IFS whose attractor A would match the target S (a very tedious and difficult problem), one can look for an IFS that maps A as close as possible to itself. More on this later.

Fractal image coding

After [3], the next natural question was: "Can we use IFS to approximate images?" The seminal paper [4] by A. Jacquin, then a Ph.D. student of Barnsley at Georgia Tech, provided the basis of block-based fractal image coding which is still used today. Jacquin's paper launched an intensive activity in fractal image compression [5-7]. Indeed, in the meantime, Barnsley left Georgia Tech to found the company Iterated Systems which was dedicated to the commercialization of fractal image compression.

In fractal image coding, one tries again to approximate a "target" image y with the fixed point x' of a contractive fractal transform T. In general, however, this direct inverse problem is difficult because of the complex nature of the fractal transform, which maps modified subimages onto other regions. A great simplification is afforded by the Collage Theorem of Ref. [3] above. In collage coding, one looks for a transform T that minimizes the so-called collage distance d(y,Ty). Most, if not all, fractal image coding methods rely on collage coding.

Very briefly, block-based fractal image coding is a "local IFS" procedure. Given an image I, one typically seeks to approximate its subimages on n x n range subblocks R_i with contracted (i.e., decimated) and greyscale-modified subimages on 2n x 2n domain subblocks D_j. For each range block, one searches for the best domain block D_i from a domain pool. The larger the domain pool, the better opportunity for a good approximation, but at the expense of higher search times.

Below is an example of fractal image coding as applied to the infamous Lena image:

Fractal compression methods were actually quite competitive until the appearance of powerful wavelet-based methods and, subsequently, context-based coders. Nevertheless, it has always been the philosophy of the Waterloo research programme that fractal-based methods are interesting mathematically and that they may also be able to provide useful information about images. We are indeed finding that there is much "life after compression".

A simple introduction to IFS

Suppose that w : X -> X is a contraction mapping of a complete metric space (X,d) to itself. Then, by the celebrated Banach Fixed Point Theorem, there is a unique point x' in X such that w(x') = x', the fixed point of w. Moreover, for any starting point x_0 in X, the sequence of points {x_n} defined by the iteration procedure x_{n+1}=w(x_n) converges to x' as n -> infinity. In other words, x' is an attractive fixed point .

Now suppose that we have more than one contraction mapping on X, i.e. w_i : X -> X, i=1,...N. Each of these maps w_i will have its own fixed point x'_i. And, of course, if we apply only one of these maps repeatedly, say w_P, then the iteration sequence {x_n} will converge to its fixed point x'_P. But what happens if you pick the maps at random? Clearly, each map will be fighting to bring the sequence to its own fixed point!

Assuming that you pick each map w_i with a nonzero probability p_i (sum of p_i = 1), the sequence will eventually approach a unique set, the "attractor" A of the "iterated function system" W = {w_1, ... , w_N}, performing a random walk over it (or at least getting nearer and nearer to it). The probabilities will play a role in determining how often various regions of the attractor are visited.

Examples on [0,1]:

w_1(x) = x/3, w_2(x) = x/3 + 2/3. Note that w_1(0)=0 and w_2(1)=1. Then the attractor of this IFS is the classical Cantor set on [0,1].

An example in [0,1] X [0,1]:

Note: Let us go back to Franklin Mendvil's beautiful montage of photos presented above. Starting at the upper left photo (which could be considered as the "seed" set), if you scan left-to-right and then move down and scan left-to-right again, you have the first six "sets" that are approaching this "rectangular" Sierpinski gasket.

For a little more discussion on this subject, along with a quite readable introduction to fractal image coding, you may wish to look at E.R. Vrscay's Hitchhiker's Guide to Fractal Image Compression. It was written for a general audience with some undergraduate mathematical knowledge. Ref. [8] below is also a very nice and readable discussion of fractal image coding.

References

The most popular "fractal-based" algorithms for both the representation representation as well as the compression of computer images have involved some implementation of the method of Iterated Function Systems (IFS) on complete metric spaces, e.g. IFS with probabilities (IFSP), Iterated Fuzzy Set Systems (IFZS), Fractal Transforms (FT), the Bath Fractal Transform (BFT) and IFS with grey-level maps (IFSM). (FT and BFT are special cases of IFSM.) The "IFS component" of these methods is a set of N contraction maps {w_1, w_2,...,w_N}, w_i : X -> X, over a complete metric space (X,d), the "base space" which represents the computer screen.

The major developments of the research at Waterloo up to roughly 2000 were:

Much of the above is summarized in the following paper:

J. Elton originally proved that the "chaos game" for an IFS with probabilities was ergodic. Here, we use the additional fact that the invariant measure is unique to provide a simpler proof:

After a kind of hiatus, we have returned to fractal image coding (FIC) to determine whether it can

Recall that FIC is a "local IFS" procedure involving the approximation of image range subblocks by greyscale-modified subimages that are supported on larger domain subblocks.

We have been able to show that fractal coding can work remarkably well to denoise images. We have also shown that such denoising can also be accomplished in the wavelet domain [6,20] using the fractal-wavelet transform. Much of this work is to be found in the Ph.D. thesis of M. Ghazel:

The success of fractal denoising - and indeed fractal image compression methods in general - is based upon the important fact that, in general, even in the presence of noise, a significant number of domain subblocks will approximate a given range subblock almost as well as the optimal domain subblock selected by collage coding. It appears that this property, perhaps implicitly assumed, has never been investigated in detail until now. This work formed a part of the Ph.D. thesis of S.K. Alexander.

In his Ph.D. thesis work, D. Piche investigated the fractal-wavelet coding of images using nonseparable Haar wavelet basis functions that were constructed over complex tilings, following the procedure of Grochenig and Madych.

Consider the evolution equation u_t = u - Tu, where T is a contraction mapping. Then u -> u' as t -> infinity, where u'=Tu' is the unique fixed point of T. (To the best of our knowledge, no study or use of such a seemingly inocuous evolution equation has appeared in the literature.) If T is a (nonlocal) fractal transform operator, then the above represents a continuous evolution toward the attractor/fixed point of T. If we use the Euler forward method to discretize/differentiate in time, then when the timestep h=1, we have the usual iteration method of fractal coding.

Nonlocal ODEs/PDEs have been studied in a number of theoretical contexts, but not in imaging.

We show how fractal image coding can be viewed and generalized in terms of the method of projections onto convex sets (POCS). In this approach, the fractal code defines a set of spatial domain similarity constraints. We also show how such a reformulation in terms of POCS allows additional contraints to be imposed during fractal image decoding. Two applications are presented: image construction with an incomplete fractal code and image denoising.

We present a novel single-frame image zooming technique based on so-called "self-examples". Our method combines the ideas of fractal-based image zooming, example-based zooming, and nonlocal-means image denoising in a consistent and improved framework. In Bayesian terms, this example-based zooming technique targets the MMSE estimate by learning the posterior directly from examples taken from the image itself at a different scale, similar to fractal-based techniques. The examples are weighted according to a scheme introduced by Buades et al. to perform nonlocal-means image denoising. Finally, various computational issues are addressed and some results of this image zooming method applied to natural images are presented.

In the following paper we introduce a fractal-based method over (complex-valued) Fourier transforms of functions with compact support X subset R. This method of ``iterated Fourier transform systems'' (IFTS) has a natural mathematical connection with the fractal-based method of IFSM in the spatial domain. A major motivation for our formulation is the problem of resolution enhancement of band-limited magnetic resonance images. In an attempt to minimize sampling/transform artifacts, it is our desire to work directly with the raw frequency data provided by an MR imager as much as possible before returning to the spatial domain. In this paper, we show that our fractal-based IFTS method can be tailored to perform frequency extrapolation.

We define an abstract framework for self-similar vector-valued Borel measures on a compact space (X,d) based upon a formulation of IFS on such measures. This IFS method permits the construction of tangent and normal vector measures to planar fractal curves. Line integrals of smooth vector fields over planar fractal curves may then be defined. These line integrals then lead to a formulation of Green's Theorem and the Divergence Theorem for planar regions bounded by fractal curves.

The following projects represent the vision of Davide La Torre, who joined our group in 2006 (see "A brief history of our group"). We seek to formulate IFS-type operators over spaces of multifunctions, that is, set-valued functions.

D. La Torre, F. Mendivil and E.R. Vrscay, Iterated function systems on multifunctions, in Math Everywhere (Springer-Verlag, Heidelberg, 2006).

H.E. Kunze, D. La Torre and E.R. Vrscay, Contractive multifunctions, fixed-pont inclusions and iterated multifunction systems, J. Math. Anal. Appl.330, 159-173 (2007).

Most natural phenomena or the experiments that explore them are subject to small variations in the environment within which they take place. As a result, data gathered from many runs of the same experiment may well show differences that are most suitably accounted for by a model that incorporates some randomness. Differential equations with random coefficients are one such class of useful models. In this paper we consider such equations as random fixed point equations T(omega,x(omega))=x(omega), where T : omega x X -> X is a given integral operator, omega is a probability space and X is a complete metric space. We consider the following inverse problem for such equations: given a set of realizations of the fixed point of T (possibly the interpolations of different observational data sets), determine the operator T or the mean value of its random components, as appropriate. We solve the inverse problem for this class of equations by using the collage theorem.

Note: The Waterloo Fractal Compression Project used to house the infamous Waterloo BragZone and Fractals Repository which was maintained by John Kominek, Unfortunately, we are no longer supporting this facility.